Survey creators need to pay more attention to the details of wording, question types, and other matters that affect not only the results but also how customers view the company. A recent survey from Sage Software had quite a few issues, and it gives me the opportunity to share some pointers.

After I had spent some time with a new version of ACT!, Sage sent a follow-up satisfaction survey. Call me a dinosaur, but after experiments with various online services, I still prefer a standalone CRM. Still, this post isn’t really about ACT!; I’m just giving a little background to set the stage.

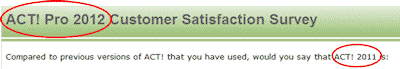

- The survey title is ACT! Pro 2012 Customer Satisfaction Survey. Yet one of the questions asks the survey taker to compare ACT 2011 with previous versions. How dumb does this look?

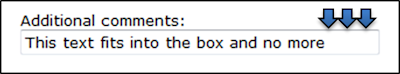

- This same question has a text box for additional comments. The box is too small to be of much use, and it also can’t be filled with text. All the text boxes in the survey have the same problem.

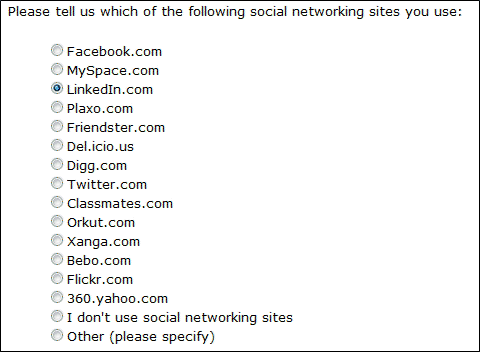

- If you have a question that should be multiple choice, set it up correctly.

Some survey tools may use radio buttons for multiple choice (not a good idea), but this isn’t one of them. This question should either be reworded along the lines of “Which of these is the most important social networking site you use?”, or (probably better) use a multiple choice question type.

- Keep up to date.

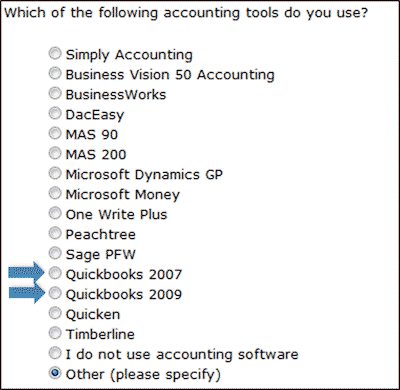

What happened to Quickbooks 2008, or more recent versions? It would have been better to simply have Quickbooks as an option (none of the other products had versions). If the version of Quickbooks was important (I know that integration with Quickbooks is a focus for Sage), then a follow-up question asking for the date or version would work, and would make the main question shorter.

- There were a couple of questions about importance and performance for various features. I could nitpick the importance question (more explanation about the features, or an option something like “I don’t know what this is”, would have been nice), but my real issue is with the performance question.

Twenty different features were included in both importance and performance. That’s a lot to keep in mind, so it’s good to make the survey taker’s life easier by keeping the order consistent between importance and performance. The problem was that the order of the performance list didn’t match the order of the importance list. I thought at first that the two lists were randomized separately, instead of randomizing the first list and using the same order for the second. This is a common mistake, and sometimes the survey software doesn’t support doing it the right way. But after trying the survey again, I discovered that both lists were in fixed orders, and the two orders were different. Be consistent.

Note: if your scales are short enough, and if you don’t have a problem with the survey taker adjusting their responses as they think about performance and importance together (that’s a topic of debate among researchers), you might consider showing importance and performance together for each option.
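For anyone scripting their own survey, here is a minimal sketch of "randomize once, reuse the order" in Python. It is only an illustration: the feature names, the respondent ID, and the function names are all made up, and it says nothing about how Sage's survey tool works. The idea is to shuffle the feature list once per respondent and hand the same order to both the importance grid and the performance grid.

```python
import random

# Hypothetical feature list; a real survey would have its own (20 in this case).
FEATURES = ["Contact management", "Calendar", "Email integration", "Reporting"]


def feature_order_for(respondent_id: str, features: list[str]) -> list[str]:
    """Return a shuffled copy of `features` that is stable for a given respondent."""
    # Seeding with the respondent ID means the same person always gets the
    # same order, even if the survey page is rebuilt or reloaded.
    rng = random.Random(respondent_id)
    order = list(features)
    rng.shuffle(order)
    return order


def build_grids(respondent_id: str, features: list[str]) -> dict[str, list[str]]:
    """Build the importance and performance grids from ONE shared order."""
    order = feature_order_for(respondent_id, features)
    # Both questions reuse the same order instead of shuffling twice.
    return {"importance": order, "performance": list(order)}


grids = build_grids("respondent-001", FEATURES)
print(grids["importance"])
print(grids["performance"])
```

Shuffling inside each question separately would reproduce exactly the mismatch I first suspected; shuffling once and passing the result to both questions avoids it.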

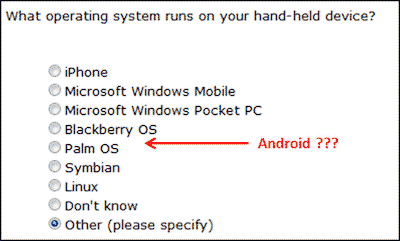

- Keep up to date – really! The survey asked whether I used a mobile computing device such as a smartphone. But the next question asked about the operating system for the smartphone without including Android. Unbelievable!

There were a few other problems that I noted, but they are more related to my knowledge of the product and Sage’s stated directions. But similar issues to those above occur on a wide variety of surveys. Overall, I score this survey 5 out of 10.

These issues make me as a customer wonder about the competence of the people at Sage. A satisfaction survey is designed to learn about customers, but should also create the opportunity to make the customers feel better about the product and the company. However, if you don’t pay attention to the details you may do more harm than good.

Idiosyncratically,

Mike Pritchard
